Robo-Perverts, Racism, and Bollywood Hip Hop: 7 AI Experiments that Will Make You Afraid for the Future

Take a look at many of the AI experiments being run in universities around the world, and there’s only one word that accurately describes what the future might be like:

Stupid.

If these projects are any indication, get ready for an AI apocalypse that includes schizophrenic robots, racist AIs, annoying pop songs, and fun games that could lead to the end of the human race as we know it.

Here are seven AI experiments that will make you very afraid for the future.

1. Inspirobot Will Crush Your Soul

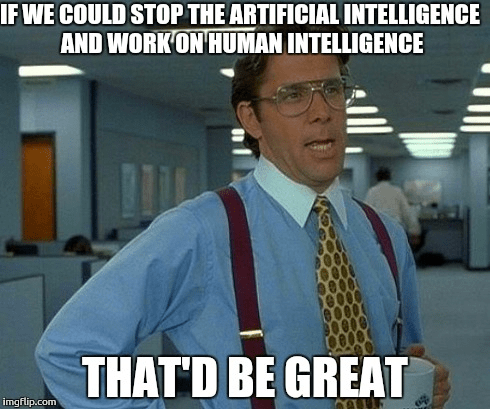

If you had any doubt at all that an evil AI will rise up against humankind and kill us all, then please take a look at exhibit A: Inspirobot. This web-based AI is programmed to generate the kind of inspirational memes you often see people posting on Instagram.

At first glance, Inspirobot’s memes don’t look that different: funky fonts emblazoned over soft-focus images of sunsets, mountains, and contemplative moments.

But look again: The messages are bleak, nonsensical, or just downright terrifying.

Might this be a sign of things to come—proof that an AI will hate our soft, sentimental human flesh?

2. An AI Wrote this Bollywood Hip Hop Song

When you think of all the ways that AI might benefit humankind, composing a Bollywood hip hop song may not be the first thing that comes to mind.

And yet, here we are.

Philadelphia data scientist Srikanth Iyer recently wondered if he could create the ultimate Bollywood party song by feeding lyrics from 100 other songs into a neural network.

The result?

Scientifically, he created the greatest Bollywood party song ever. Whether it is actually good or not is another matter. The possibilities, however, are terrifying.

Imagine a future where millions of Venga Bus-esque pop hits are churned out every day by AI songwriters. It’s sick.

3. Neo Nazi Chatbot

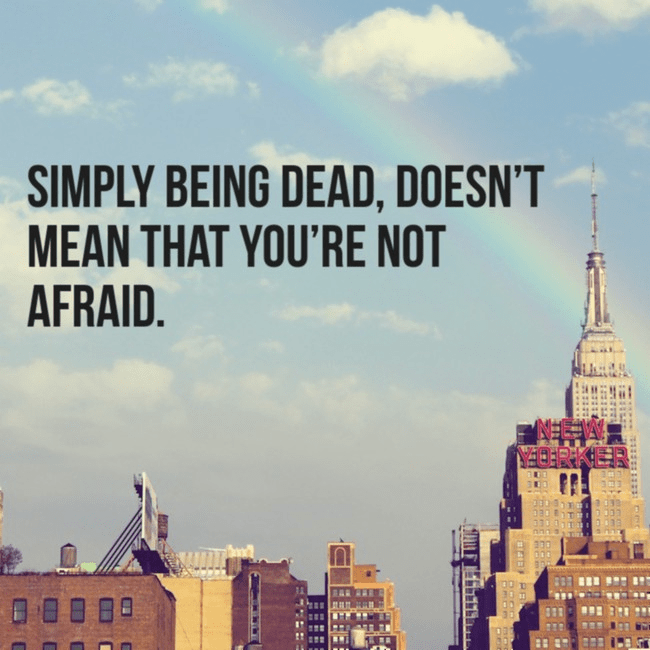

When Microsoft developed an AI in the persona of a 19-year-old girl named Tay and released her on Twitter, it seemed like an interesting experiment.

But, less than a day later, things went horribly wrong.

In 24 hours she gained 50,000 followers, and quickly started imitating them.

Soon, Tay began saying offensive things like “Hitler was right” and “i f****** hate feminists.” It got so bad that Microsoft had to delete the robot.

Here we are worrying about an evil AI, but it turns out that we are a terrible influence. Maybe we all just need to put the computers away and take a good look in the mirror.

4. Schizophrenic Robot

Given all the dire scenarios involving AI that have been predicted over the years, you’d think it might be a bad idea to create a schizophrenic AI.

Well, researchers at the University of Texas thought a little differently.

They overloaded their AI test subject, a neural network named DISCERN, in an attempt to emulate the mind of a schizophrenic.

Pretty soon, DISCERN struggled to separate fact from fiction and began to refer to itself in the third person. At one point, the computer even claimed responsibility for a terrorist bombing.

Let’s just hope they keep that thing locked up in the lab.

5. Robot Porn Addict

We know it can be bad for humans to sit in a dark room watching porn all day. But what about robots?

Well, if Brian Moore’s “Porn Addict Robot” is anything to go by, they just think they’re watching people brush their teeth.

Moore’s robot binge-watches videos from Pornhub, and then explains what it thinks it’s seeing via Twitter. The results are not only woefully inaccurate, but also hilarious.

6. AI Can Predict the Future

While many of the AIs described here seem to have very little grasp of reality, “Nautilus” is one major exception. This AI was fed millions of newspaper articles dating back to 1945.

Then, it was asked to make predictions about the future.

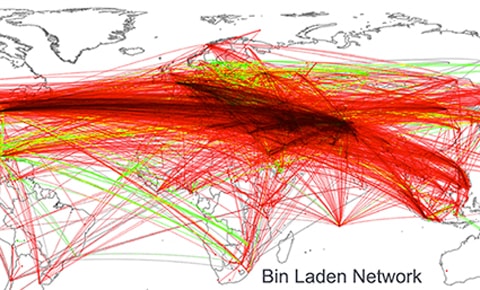

Shockingly, based on this information, Nautilus was able to accurately pinpoint the location of Osama bin Laden. It also predicted revolutions in Egypt, Tunisia, and Libya.

This could be bad news for the pundits, but then it often seems they are paid to be wrong.

7. Could This Fun AI Game be Something More Sinister?

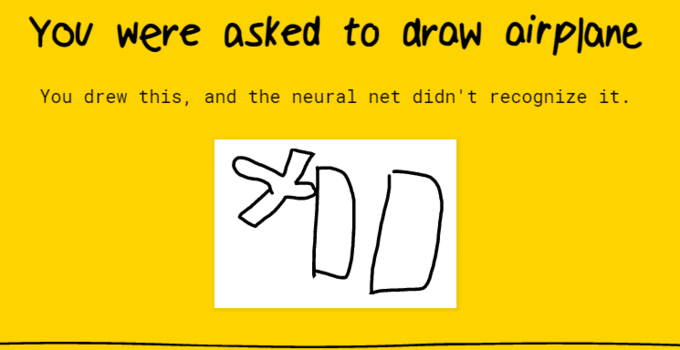

Google’s “Quick, Draw!” AI experiment may not seem that sinister. What could be bad about a Pictionary-style game where the computer must guess what you’ve drawn?

But step back. What’s really going on here?

When Quick, Draw! asks us to draw a helicopter, are we actually training the real-life Skynet to bring down our military? When it asks us to draw a gun, will a Terminator robot use our sketches to grasp a real weapon? The possibilities are terrifying.